A cache block (or line) is the minimum unit of information that can be either present or not present in a cache.

A processor accesses data within a cache block through an addressing system. The smallest chunk of information that the processor can read is in units of words. If the size of the word is just ![]() byte, the system is called byte-addressable.

byte, the system is called byte-addressable.

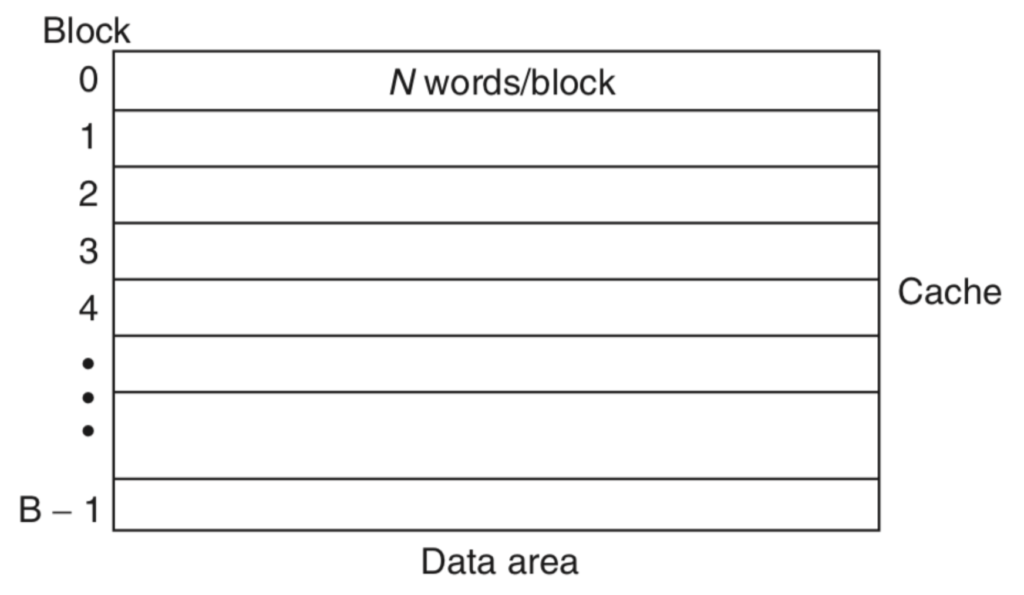

In the above figure the cache is divided into ![]() blocks. Each block is further divided into

blocks. Each block is further divided into ![]() words.

words.

Reducing Miss Penalty on Cache Miss

In the event of a cache miss, the processor needs to wait until an entire block in the cache is replaced from main memory. However, the data that is actually needed by the processor won’t be the entire block but some specific word(s) within this block. Thus, instead of waiting for the full block to be loaded, the processor can resume its operation as soon as the necessary word(s) are available.

Early Restart

In this optimization, the words in the block are fetched in the normal order (sequentially). However, as soon as the requested word of the block arrives, it is sent to the processor. The processor now continues execution. This mechanism is called early restart because the processor resumes execution before the entire cache block is loaded.

In the worst case, the word that is requested may be the last word that is fetched into a cache block. In this case, the processor has to wait until the entire block is loaded to access that particular word. This makes the optimization inefficient.

Critical Word First

The word that the processor needs is tagged as a critical word. This missing critical word is first fetched from memory and sent to the processor. The processor now continues execution. The rest of the words in the block are then fetched in the normal order (sequentially).

Example

Consider a ![]() -byte cache block. The size of a word is

-byte cache block. The size of a word is ![]() -bits. Thus, there are

-bits. Thus, there are ![]() words in a block. It takes

words in a block. It takes ![]() clock cycles to fetch the first word and

clock cycles to fetch the first word and ![]() clock cycles for every subsequent word.

clock cycles for every subsequent word.

With the Critical Word First optimization, it takes ![]() cycles to get the (first) critical word, and

cycles to get the (first) critical word, and ![]() cycles to fetch the remaining words. Thus, the processor resumes execution after 8 cycles and the cache block is replaced after

cycles to fetch the remaining words. Thus, the processor resumes execution after 8 cycles and the cache block is replaced after ![]() cycles.

cycles.

If the Critical Word First optimization is not in place, the word that the processor needs, in the worst case, can be the last word that is fetched into the cache block. It takes ![]() cycles to get the first word, followed by

cycles to get the first word, followed by ![]() cycles to fetch the remaining words. Thus, the processor may have to wait for

cycles to fetch the remaining words. Thus, the processor may have to wait for ![]() cycles before resuming execution.

cycles before resuming execution.

Observations

- Both the above optimizations, allow the processor to resume execution without waiting for the entire cache block to be fetched. Thus, a reduction in miss penalty is achieved.

- Given spatial locality, there is a good chance that the next reference is another word in the same block (which may or may not have been fetched yet). In this case, the effective miss penalty is the non-overlapped time from the initial reference until the second word arrives.

- This optimization generally benefits caches with a large block size. The benefits of the Critical Word First optimization is a tradeoff between the size of the block and the likelihood of another access to a word within the block that has not yet been fetched.

Pingback: Cache Optimizations that reduce Miss Penalty - TheBeardSage