The Markov Property makes a very strong assumption that the output of a random variable in a sequence only depends on the current state. Consider a sequence of state variables ![]() . The Markov assumption states that

. The Markov assumption states that

![]()

A discrete-time process satisfying this property is called a Markov Chain and a continuous process is called a Markov process.

Markov Chain

A Markov Chain is defined by a state space and the probabilities of transitioning within this state space. Given ![]() states there are

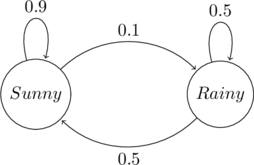

states there are ![]() possible transitions. This can be represented as a finite state machine with the symbols above the transition indicating the probability of that transition.

possible transitions. This can be represented as a finite state machine with the symbols above the transition indicating the probability of that transition.

Consider the discrete process of predicting the weather for a given day. The set of values it can take include ![]() . These correspond to the states. Each discrete quanta is one day.

. These correspond to the states. Each discrete quanta is one day.

The Markov assumption states that today’s weather depends only on yesterday’s weather. Assume that there is a high chance that today will be ![]() if yesterday was

if yesterday was ![]() and a moderate chance that today will be

and a moderate chance that today will be ![]() if yesterday was

if yesterday was ![]() . These values are added as probabilities. The outgoing probabilities of a state must add up to

. These values are added as probabilities. The outgoing probabilities of a state must add up to ![]() .

.

Using this Markov Chain, the weather can be predicted by following the transitions. A test run will result in the following output. ![]() stands for

stands for ![]() and

and ![]() stands for

stands for ![]()

![]()

Memoryless

The Markov property makes the model memoryless. The sequence of states generated are not context-sensitive and do not rely on the information captured in the full chain of prior states. For example, a Markov chain would do a poor job in mimicking the musical structure of a song. Higher order Markov chains use the assumption of the current state relying on multiple prior states.

Mathematical Representation

A Markov Chain can be mathematically represented as transition matrix. Given ![]() possible states, the matrix will have dimensions

possible states, the matrix will have dimensions ![]() , where each entry

, where each entry ![]() is the probability of transitioning from

is the probability of transitioning from ![]() to

to ![]() . Also, by the law of total probability

. Also, by the law of total probability

The Markov chain also has an initial state vector of dimensions ![]() , where each entry

, where each entry ![]() is the probability of starting at the

is the probability of starting at the ![]() -th state.

-th state.