The misses that arise from interprocessor communication, which are often called coherence misses, can be broken into two separate sources.

True Sharing

These are the misses that arise from the communication of data through the cache coherence mechanism. In an invalidation-based protocol, the first write by a processor to a shared cache block causes an invalidation to establish ownership of that block. Additionally, when another processor attempts to read a modified word in that cache block, a miss occurs and the resultant block is transferred. Both these misses are classified as true sharing misses since they directly arise from the sharing of data among processors.

False Sharing

False sharing occurs when a block is invalidated (and a subsequent reference causes a miss) because some word in the block, other than the one being read, is written into. If the word written into is actually used by the processor that received the invalidate, then the reference was a true sharing reference and would have caused a miss independent of the block size. If, however, the word being written and the word read are different and the invalidation does not cause a new value to be communicated, but only causes an extra cache miss, then it is a false sharing miss. In a false sharing miss, the block is shared, but no word in the cache is actually shared.

Example

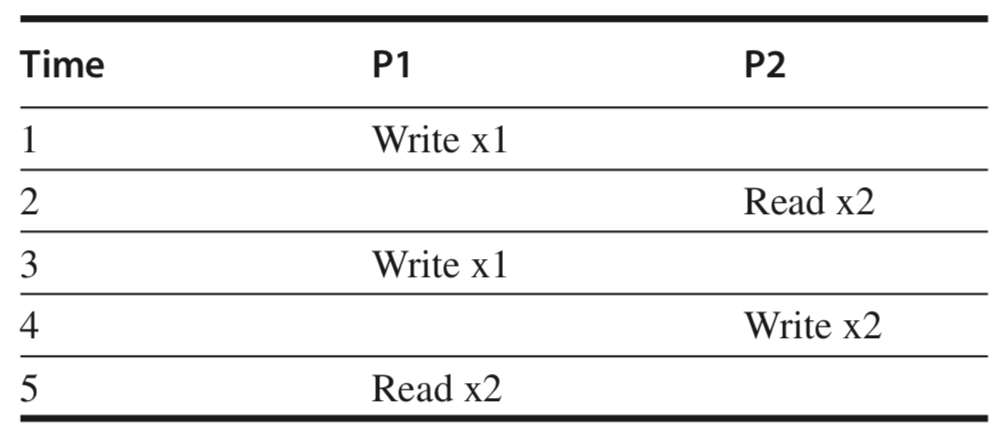

Assume that words x1 and x2 are in the same cache block, which is in the shared state in the caches of both P1 and P2. Assuming the following sequence of events, identify each miss as a true sharing miss, a false sharing miss, or a hit. Any miss that would occur if the block size were one word is designated a true sharing miss.

- This event is a true sharing miss, since x1 was read by P2 and needs to be invalidated from P2.

- This event is a false sharing miss, since x2 was invalidated by the write of x1 in P1, but that value of x1 is not used in P2.

- This event is a false sharing miss, since the block containing x1 is marked shared due to the read in P2, but P2 did not read x1. The cache block containing x1 will be in the shared state after the read by P2; a write miss is required to obtain exclusive access to the block. In some protocols this will be handled as an upgrade request, which generates a bus invalidate, but does not transfer the cache block.

- This event is a false sharing miss for the same reason as step 3.

- This event is a true sharing miss, since the value being read was written by P2.

Ping-Ponging

Cache line ping-ponging is the effect where a cache line is transferred between multiple CPUs (or cores) in rapid succession. This can be cause by either false or true sharing. Essentially if multiple CPUs are trying to read and write data in the same cache line then that cache line might have to be transferred between the two threads in rapid succession, and this can cause a significant performance degradation (possibly even worse performance than if a single thread were executing). False sharing can make this problem particularly difficult to detect, because a programmer might have tried to write an application so that the threads weren’t sharing data, without realizing that the data was mapped to the same cache line. But false sharing is not the only possible cause of cache-line ping-ponging. This could also be caused by true sharing where multiple threads are trying to read and write the same data.