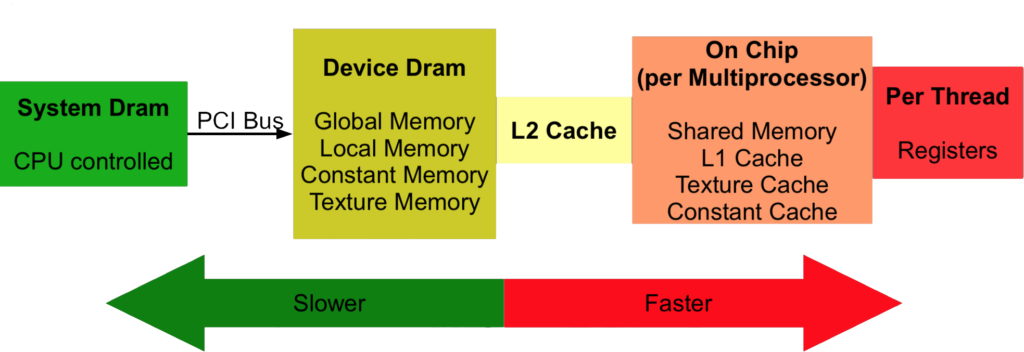

The CPU and GPU have separate memory spaces. This means that data that is processed by the GPU must be moved from the CPU to the GPU before the computation starts, and the results of the computation must be moved back to the CPU once processing has completed.

Overview

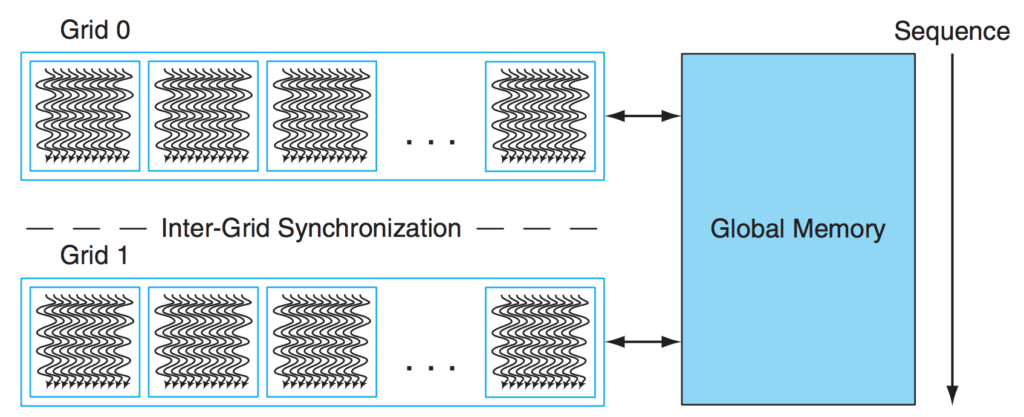

Global Memory

There’s a large amount of global memory. It’s slower to access than other memories like shared and registers. All running threads can read and write global memory and so can the CPU. The functions cudaMalloc, cudaFree, cudaMemcpy and cudaMemset all deal with global memory. Global memory is allocated and deallocated by the host.

This is the main memory store of the GPU, every byte is addressable. It is persistent across kernel calls.

Constant Memory

This memory is also part of the GPU’s main memory. It has its own cache. Not related to the L1 and L2 of global memory. All threads have access to the same constant memory but they can only read, they can’t write to it. The CPU sets the values in constant memory before launching the kernel.

It is very fast (register and shared memory speeds) if all running threads in a warp read exactly the same address. It’s small, there’s only 64k of constant memory!

All running threads share constant memory. In graphics programming, this memory holds the constants like the model, view and projection matrices.

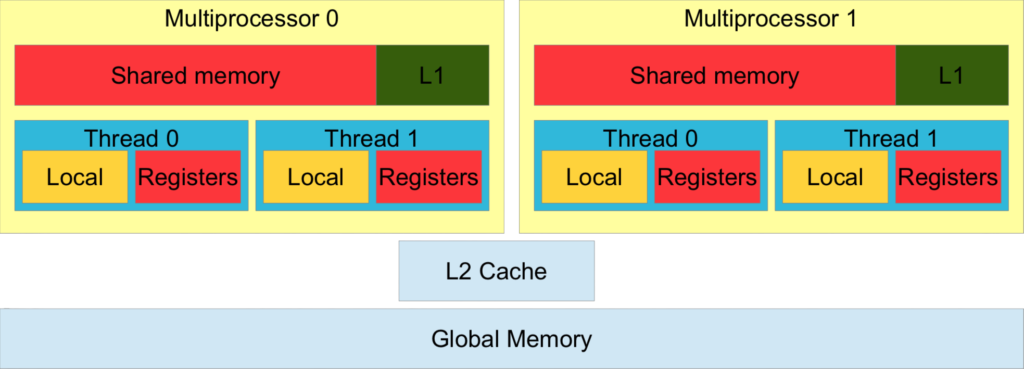

Shared Memory

Shared memory is very fast (register speeds). Shared memory is used to enable fast communication between threads in a block. Shared memory only exists for the lifetime of the block.

Bank conflicts can slow access down. It’s fastest when all threads read from different banks or all threads of a warp read exactly the same value. Bank conflicts are only possible within a warp. No bank conflicts occur between different warps.

Caches

In some architectures, there’s also an L1 cache per multiprocessor and an L2 cache which is shared between all multiprocessors. Global and local memory use these.

The L1 and shared memory are actually the same bytes. The L1 is very fast (register speeds).

All global memory accesses go through the L2 cache, including those by the CPU.

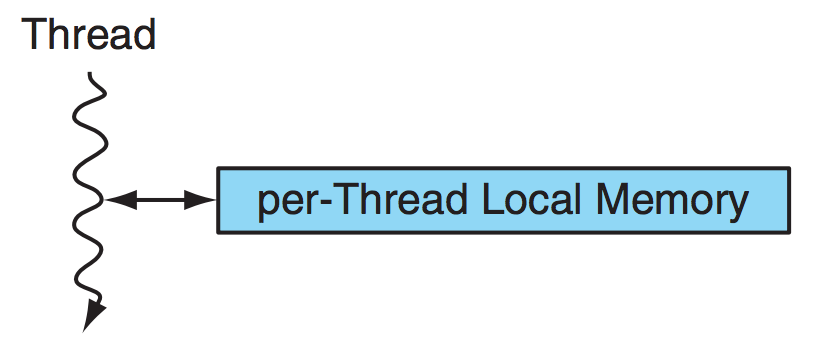

Local Memory

This is also part of the main memory of the GPU (same as the global memory) so it’s generally slow. Local memory is used automatically by threads when we run out of registers or when registers cannot be used. This is called register spilling. It happens if there’s too many variables per thread to use registers or if kernels use structures. Also, arrays that aren’t indexed with constants use local memory since registers don’t have addresses, a memory space that’s addressable must be used. The scope for local memory is per thread.

Local memory is cached in an L1 then an L2 cache so register spilling may not mean a dramatic performance decrease.

Registers

Registers are the fastest memory on the GPU. The variables we declare in a kernel will use registers unless we run out or they can’t be stored in registers, then local memory will be used.

Register scope is per thread. Unlike the CPU, there’s thousands of registers in a GPU. Carefully selecting a few registers can easily double the number of concurrent blocks the GPU can execute and therefore increase performance substantially.

Memory Access Speed

Summary

| Memory | Access | Scope | Lifetime | Speed | Note |

|---|---|---|---|---|---|

| Global | RW | All threads and CPU | All | Slow, cached | Large |

| Constant | R | All threads and CPU | All | Slow, cached | Read same address |

| Local | RW | Per thread | Thread | Slow, cached | Register Spilling |

| Shared | RW | Per block | Block | Fast | Fast communication between threads |

| Registers | RW | Per thread | Thread | Fast | Don’t use too many |