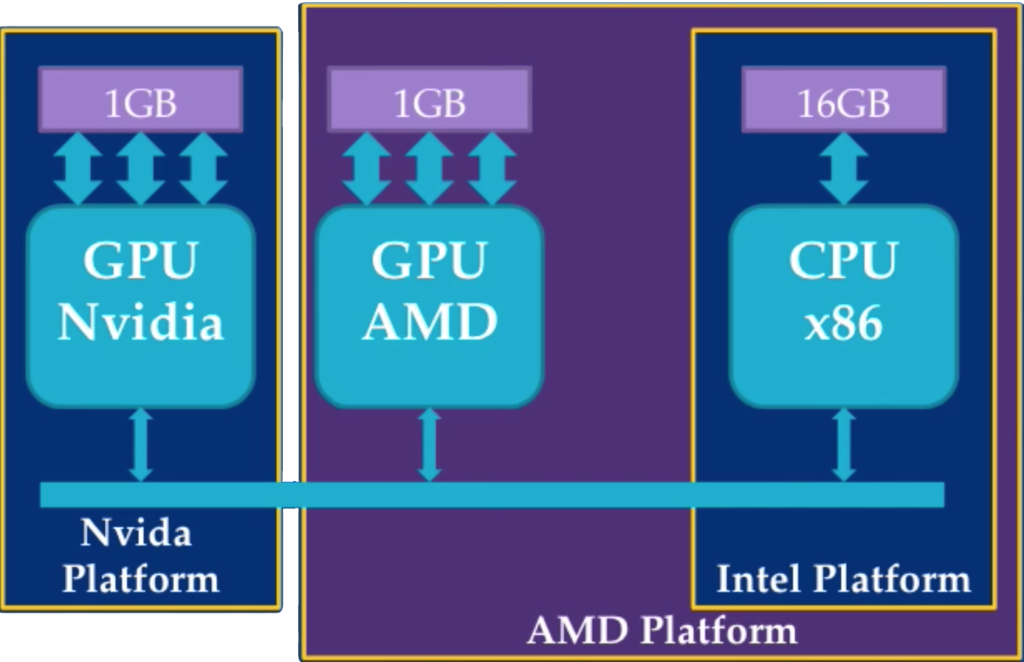

Platforms

A platform is a collection of devices. The platform determines how data can be shared efficiently. If a platform exposes both a CPU and a GPU, the vendor has typically optimized data flow between the two devices. Sharing data within a platform is therefore generally more efficient than sharing it across platforms.

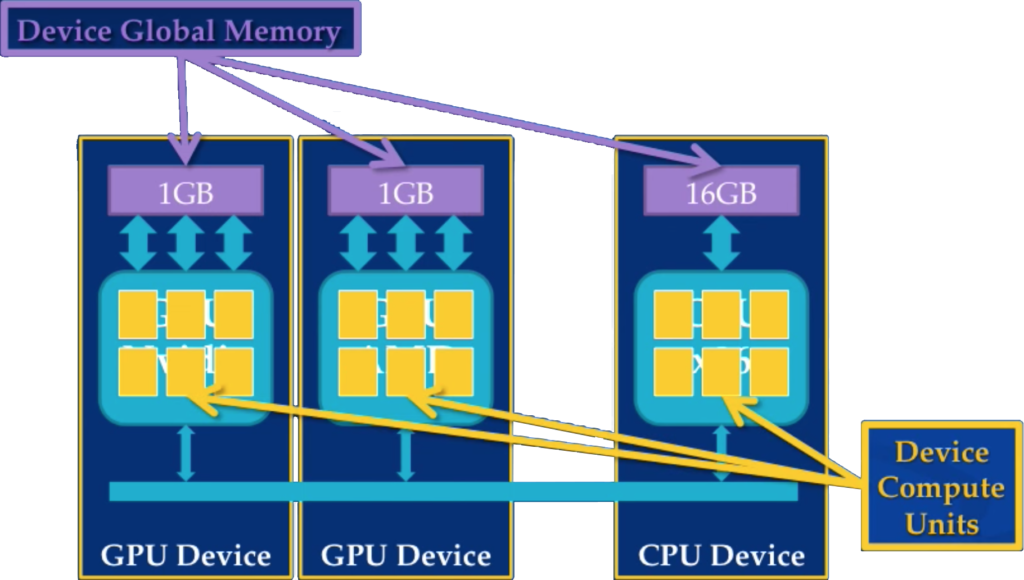

Devices

Each individual CPU or GPU is called a device. A CPU can serve as both an OpenCL device and the host processor. Devices are connected via a bus. Each device has its own attached memory, and access to that memory is limited by its peak bandwidth (the arrows in the figure).

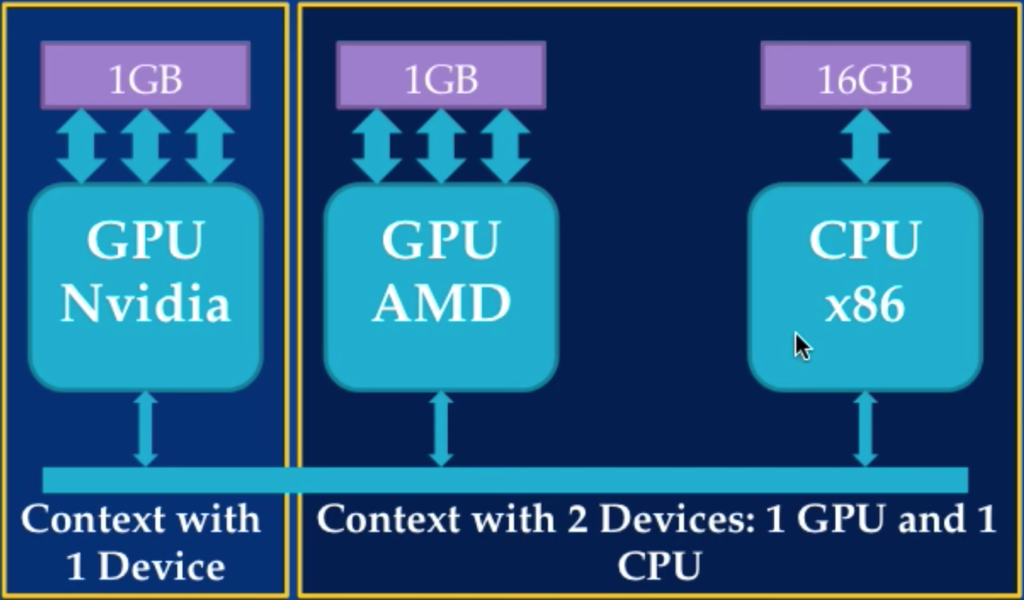

Context

The context is the environment within which kernels execute. This environment includes:

- A set of devices. All devices in a context must be in the same platform.

- The memory accessible to those devices

- One or more command queues used to schedule the execution of kernels or operations on memory objects.

Contexts are used to contain and manage the state of the world in OpenCL. The commands issued within a context fall into three types:

- Kernel execution commands

- Memory commands – transfer or mapping of memory object data

- Synchronization commands – constrain the order in which commands execute
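The same-platform rule for contexts can be illustrated with a small plain-Python model. This is only a sketch and not the OpenCL API: the `Device` and `Context` classes, and the platform and device names, are hypothetical stand-ins. In the real C API a context is created with `clCreateContext`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical stand-in for an OpenCL device."""
    name: str
    platform: str  # name of the platform that exposes this device


@dataclass
class Context:
    """Toy context: a group of devices that must share one platform."""
    devices: list

    def __post_init__(self):
        if not self.devices:
            raise ValueError("a context needs at least one device")
        platforms = {d.platform for d in self.devices}
        if len(platforms) != 1:
            raise ValueError(f"devices span several platforms: {sorted(platforms)}")

    @property
    def platform(self):
        return self.devices[0].platform


cpu = Device("cpu0", platform="VendorA")
gpu = Device("gpu0", platform="VendorA")
other_gpu = Device("gpu1", platform="VendorB")

ctx = Context([cpu, gpu])      # accepted: both devices share a platform

try:
    Context([cpu, other_gpu])  # rejected: two different platforms
    mixed_ok = True
except ValueError:
    mixed_ok = False
```

In this model, using devices from two platforms would require two separate `Context` objects, which mirrors why sharing across platforms is less convenient than sharing within one.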

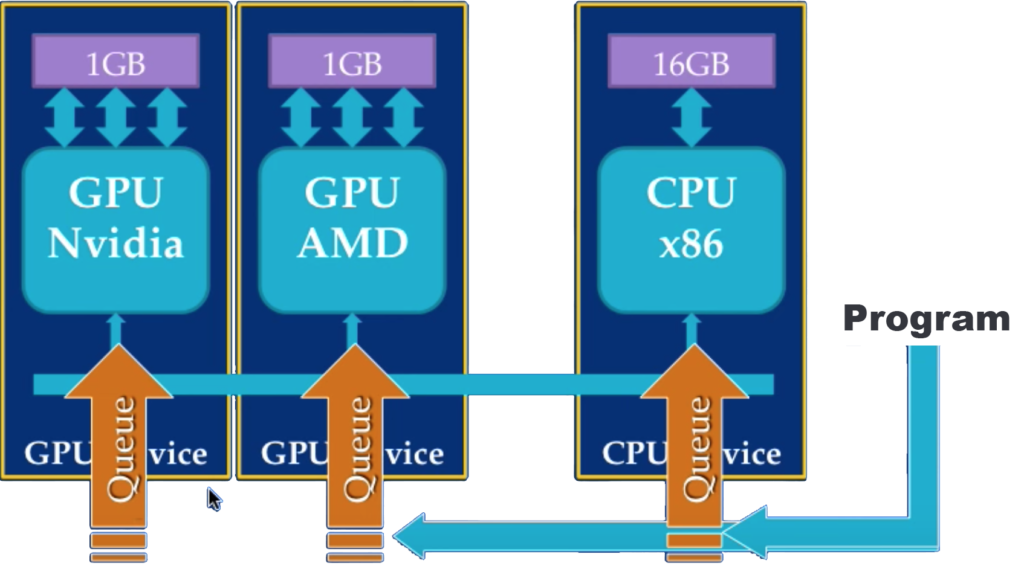

Command Queues

To submit work to a device, a command queue has to be created. The host program puts work into this queue; each command eventually reaches the front of the queue and is executed on the device. To execute on another device, a new command queue has to be created, so a command queue is needed for every device that is used. This means there is no automatic distribution of work across devices. Each device can run the same kernel, but a kernel tuned for one type of device may not perform optimally on another (CPU vs GPU).

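Each command queue points to a single device within a context, but a single device can be attached to multiple command queues at the same time. Queues can be in-order, where commands execute in the order they were enqueued, or out-of-order, where any required ordering must be enforced explicitly with synchronization commands.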
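Because each queue is bound to one device, the host has to split the work itself. The following plain-Python sketch models that idea. It is a toy, not the OpenCL API: `CommandQueue`, `square_all`, and the device names are hypothetical, and the "devices" are simply labels.

```python
from collections import deque


class CommandQueue:
    """Toy in-order queue bound to exactly one (hypothetical) device."""

    def __init__(self, device_name):
        self.device_name = device_name
        self._pending = deque()
        self.log = []  # (device, label) pairs, in execution order

    def enqueue(self, label, fn, *args):
        # Enqueueing only records the command; nothing runs yet.
        self._pending.append((label, fn, args))

    def finish(self):
        # Run pending commands in submission order (in-order queue).
        results = []
        while self._pending:
            label, fn, args = self._pending.popleft()
            results.append(fn(*args))
            self.log.append((self.device_name, label))
        return results


def square_all(values):
    """Stand-in for a kernel: the same code runs on either device."""
    return [v * v for v in values]


data = list(range(8))
half = len(data) // 2

# One queue per device; the host decides which device gets which slice.
cpu_queue = CommandQueue("cpu0")
gpu_queue = CommandQueue("gpu0")
cpu_queue.enqueue("square first half", square_all, data[:half])
gpu_queue.enqueue("square second half", square_all, data[half:])

result = cpu_queue.finish()[0] + gpu_queue.finish()[0]
```

The even split is an arbitrary choice for illustration. Since a CPU and a GPU usually run the same kernel at different speeds, a real program would pick the split based on measurement.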

Data Movement

There is no automatic data movement. The user has full control over performance and must explicitly:

- Allocate global data

- Write to it from the host

- Allocate local data

- Copy data from global to local (and back)
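The four steps above can be sketched in plain Python, with lists standing in for the memory regions. This is an assumption-laden toy and not OpenCL code: `run_work_group`, the tile size, and the doubling "kernel" are all hypothetical, and real local memory lives on the device and is shared by the work-items of one work-group.

```python
def run_work_group(global_mem, start, size, kernel):
    """Process one tile: stage it in local memory, compute, copy it back."""
    local_mem = [0] * size                         # allocate local data
    local_mem[:] = global_mem[start:start + size]  # copy global -> local
    for i in range(size):
        local_mem[i] = kernel(local_mem[i])        # work on the local copy only
    global_mem[start:start + size] = local_mem     # copy local -> global


host_data = [1, 2, 3, 4, 5, 6, 7, 8]

global_mem = [0] * len(host_data)  # allocate global data
global_mem[:] = host_data          # write to it from the host

tile = 4  # hypothetical work-group size
for start in range(0, len(global_mem), tile):
    size = min(tile, len(global_mem) - start)  # last tile may be shorter
    run_work_group(global_mem, start, size, lambda x: 2 * x)
```

Every copy in the sketch is an explicit statement written by the programmer, which is the point of the list above: nothing moves between the host, global memory, and local memory unless the program says so.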

OpenCL Program

- Setup

  - Get the devices (and platform)

  - Create a context (for sharing between devices)

  - Create command queues (for submitting work)

- Compilation

  - Create a program

  - Build the program (compile)

  - Create kernels

- Create memory objects

- Enqueue writes to copy data to the GPU

- Set the kernel arguments

- Enqueue kernel executions

- Enqueue reads to copy data back from the GPU

- Wait for your commands to finish

- Clean up OpenCL resources

OpenCL is asynchronous. When we enqueue a command, the call returns without telling us when the command will finish. By explicitly waiting, we make sure the commands have finished before the host continues.
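The effect of enqueueing without waiting can be shown with a plain-Python model of the write, kernel, read, and wait steps. This is a sketch, not the OpenCL API: `ToyQueue`, `copy`, and `scale_kernel` are hypothetical names, and the model defers all execution until `finish()` is called, which is the most extreme case. A real queue may start commands at any time after they are enqueued. In the C API the corresponding calls are `clEnqueueWriteBuffer`, `clEnqueueNDRangeKernel`, `clEnqueueReadBuffer`, and `clFinish`.

```python
from collections import deque


class ToyQueue:
    """Hypothetical in-order queue: commands run only when finish() is called."""

    def __init__(self):
        self._pending = deque()

    def enqueue(self, fn, *args):
        self._pending.append((fn, args))  # returns immediately

    def finish(self):
        # Block until every pending command has run, in submission order.
        while self._pending:
            fn, args = self._pending.popleft()
            fn(*args)


def copy(dst, src):
    dst[:] = src


def scale_kernel(buf, factor):
    for i in range(len(buf)):
        buf[i] *= factor


host_in = [1.0, 2.0, 3.0]
host_out = [0.0] * len(host_in)
device_buf = [0.0] * len(host_in)              # create memory object

queue = ToyQueue()                             # create command queue
queue.enqueue(copy, device_buf, host_in)       # enqueue write (host -> device)
queue.enqueue(scale_kernel, device_buf, 10.0)  # set arguments, enqueue kernel
queue.enqueue(copy, host_out, device_buf)      # enqueue read (device -> host)

before_wait = list(host_out)  # in this model nothing has executed yet
queue.finish()                # wait for the commands to finish
after_wait = list(host_out)
```

Reading `host_out` before the wait gives stale data in this model. In a real program the result at that point is simply not guaranteed, which is why the explicit wait (or a blocking read) is needed.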