Fundamentals

A random variable is denoted by a capital letter, $V$, and the values it can take are denoted by the corresponding lowercase letter, $v$.

Consider a collection of $k$ random variables $V_1, V_2, \ldots, V_k$. Random variables can be thought of as features of a particular domain of interest.

For example, the result of a coin toss can be represented using a single random variable, $C$. This variable can take either of the categorical values $H$ (heads) or $T$ (tails). If the same coin is tossed $k$ times, this can be represented using $k$ variables $C_1, C_2, \ldots, C_k$. Each of these variables can take either the value $H$ or the value $T$.

Joint Probability

An expression of the form $p(V_1, V_2, \ldots, V_k)$ is called a joint probability function over the variables $V_1, V_2, \ldots, V_k$. It assigns a probability to the event that $V_1, V_2, \ldots, V_k$ take the values $v_1, v_2, \ldots, v_k$ respectively. This is denoted by the expression

$$p(V_1 = v_1, V_2 = v_2, \ldots, V_k = v_k)$$

This is sometimes abbreviated as $p(v_1, v_2, \ldots, v_k)$.

For a fair coin toss, $p(H) = 1/2$. If a fair coin is tossed five times, any particular sequence of outcomes has the same probability, for example $p(H, T, T, H, T) = 1/32$.

The joint probability function satisfies

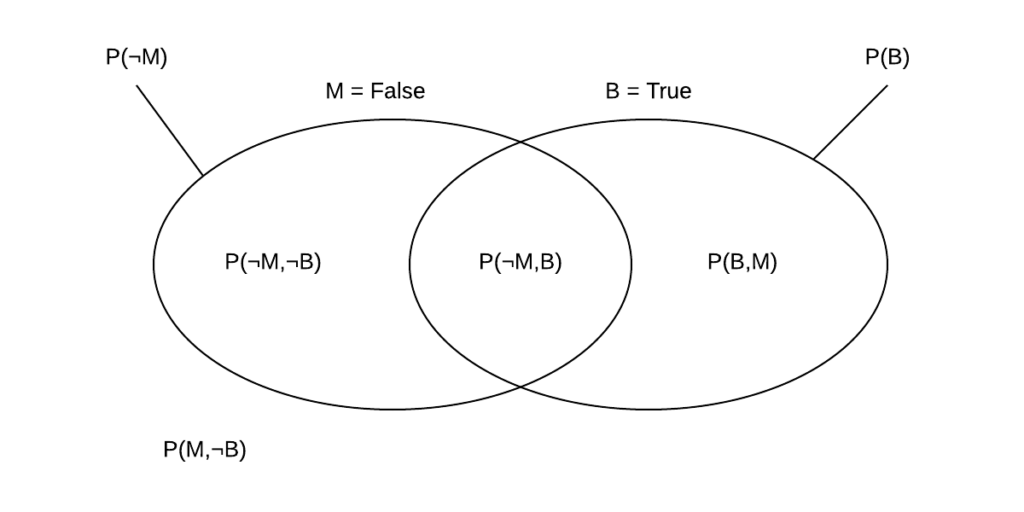
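The two standard requirements on a joint probability function are that every value lies between 0 and 1 and that the values sum to 1 over all combinations. The sketch below checks both numerically for the five-toss fair coin example. The dictionary layout and the names `joint`, `all_in_range` and `total` are illustrative choices, not part of the original text.

```python
from fractions import Fraction
from itertools import product

# Joint distribution over five tosses of a fair coin: every sequence of
# H/T outcomes has probability (1/2)^5 = 1/32.
joint = {seq: Fraction(1, 2) ** 5 for seq in product("HT", repeat=5)}

# Requirement 1: every joint probability lies between 0 and 1.
all_in_range = all(0 <= p <= 1 for p in joint.values())

# Requirement 2: the joint probabilities sum to 1 over all combinations.
total = sum(joint.values())

print(joint[("H", "T", "T", "H", "T")])  # 1/32
print(all_in_range, total)
```

Exact fractions are used instead of floats so that the sum can be compared with 1 without rounding error.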

Marginal Probability

The marginal probability of one of the random variables can be computed if the values of all of the joint probabilities for a set of random variables are known.

For example, the marginal probability $p(V_i = v_i)$ is defined to be the sum of all those joint probabilities for which $V_i = v_i$:

$$p(V_i = v_i) = \sum_{V_i = v_i} p(V_1, V_2, \ldots, V_k)$$

When dealing with propositional variables (True/False), $p(V_i = \text{True}, V_j = \text{False})$ is denoted as $p(V_i, \neg V_j)$.
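Marginalization can be sketched in a few lines. The joint table over two propositional variables `A` and `B` below is hypothetical (the numbers are made up for illustration), and `marginal` is an illustrative helper, not something defined in the article.

```python
from fractions import Fraction

# Hypothetical joint distribution over two propositional variables (A, B).
# Keys are (value of A, value of B); the numbers are illustrative only.
joint = {
    (True, True): Fraction(1, 2),
    (True, False): Fraction(1, 4),
    (False, True): Fraction(1, 8),
    (False, False): Fraction(1, 8),
}

def marginal(joint, index, value):
    """Sum the joint probabilities whose variable at `index` equals `value`."""
    return sum(p for assignment, p in joint.items() if assignment[index] == value)

p_a = marginal(joint, 0, True)       # p(A)
p_not_b = marginal(joint, 1, False)  # p(not B)

print(p_a)      # 3/4
print(p_not_b)  # 3/8
```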

Conditional Probabilities

The conditional probability of $V_i$ given $V_j$ is denoted by $p(V_i \mid V_j)$. It is defined as

$$p(V_i \mid V_j) = \frac{p(V_i, V_j)}{p(V_j)}$$

where $p(V_i, V_j)$ is the joint probability of $V_i$ and $V_j$ and $p(V_j)$ is the marginal probability of $V_j$. Thus

$$p(V_i, V_j) = p(V_i \mid V_j)\,p(V_j)$$
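As a small illustration, a conditional probability can be computed from a joint table by dividing a joint entry by a marginal. The table and the helper `prob` are hypothetical and exist only for this sketch.

```python
from fractions import Fraction

# Hypothetical joint distribution over (A, B); the numbers are illustrative only.
joint = {
    (True, True): Fraction(1, 2),
    (True, False): Fraction(1, 4),
    (False, True): Fraction(1, 8),
    (False, False): Fraction(1, 8),
}

def prob(joint, fixed):
    """Sum the joint entries consistent with `fixed`, a dict {position: value}."""
    return sum(
        p for assignment, p in joint.items()
        if all(assignment[i] == v for i, v in fixed.items())
    )

p_ab = prob(joint, {0: True, 1: True})  # joint p(A, B)
p_b = prob(joint, {1: True})            # marginal p(B)
p_a_given_b = p_ab / p_b                # conditional p(A | B)

print(p_a_given_b)                # 4/5
print(p_a_given_b * p_b == p_ab)  # True
```

The last line confirms the product form: multiplying the conditional by the marginal recovers the joint probability.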

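Joint conditional probabilities of several variables conditioned on several other variables are expressed in the same way. For example,

$$p(V_1, V_2 \mid V_3, V_4) = \frac{p(V_1, V_2, V_3, V_4)}{p(V_3, V_4)}$$

A joint probability can be expressed in terms of a chain of conditional probabilities. For four variables,

$$p(V_1, V_2, V_3, V_4) = p(V_4 \mid V_3, V_2, V_1)\,p(V_3 \mid V_2, V_1)\,p(V_2 \mid V_1)\,p(V_1)$$

The general form of this chain rule is

$$p(V_1, V_2, \ldots, V_k) = \prod_{i=1}^{k} p(V_i \mid V_{i-1}, \ldots, V_1)$$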
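The chain rule can be checked numerically on a small example. The joint table over three propositional variables below is hypothetical, and `prob` and `chain` are illustrative helpers. The sketch assumes every conditioning event has non-zero probability, which holds for these numbers.

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint distribution over three propositional variables
# (V1, V2, V3). The weights are illustrative and sum to 16.
assignments = list(product([True, False], repeat=3))
weights = [3, 1, 2, 2, 1, 5, 1, 1]
joint = {a: Fraction(w, 16) for a, w in zip(assignments, weights)}

def prob(fixed):
    """Sum the joint entries consistent with `fixed`, a dict {position: value}."""
    return sum(
        p for assignment, p in joint.items()
        if all(assignment[i] == v for i, v in fixed.items())
    )

def chain(v1, v2, v3):
    """Evaluate p(V3 | V2, V1) * p(V2 | V1) * p(V1) for one assignment."""
    p1 = prob({0: v1})
    p2_given_1 = prob({0: v1, 1: v2}) / p1
    p3_given_21 = prob({0: v1, 1: v2, 2: v3}) / prob({0: v1, 1: v2})
    return p3_given_21 * p2_given_1 * p1

# The chain of conditionals reproduces every entry of the joint table.
chain_rule_holds = all(chain(*a) == joint[a] for a in assignments)
print(chain_rule_holds)  # True
```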

Bayes Rule

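Different possible orders give different expressions but they all have the same value for the same set of variable values. Since the order of variables is not important,

$$p(V_i, V_j) = p(V_i \mid V_j)\,p(V_j) = p(V_j \mid V_i)\,p(V_i)$$

which gives Bayes' Rule

$$p(V_i \mid V_j) = \frac{p(V_j \mid V_i)\,p(V_i)}{p(V_j)}$$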
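A short worked example of Bayes' Rule, using made-up numbers. The prior `p_a` and the two likelihoods are assumptions chosen for illustration, and the marginal `p_b` is obtained by summing over both values of `A`.

```python
from fractions import Fraction

# Hypothetical inputs (illustrative numbers, not from the article).
p_a = Fraction(3, 4)              # prior p(A)
p_b_given_a = Fraction(2, 3)      # likelihood p(B | A)
p_b_given_not_a = Fraction(1, 2)  # likelihood p(B | not A)

# Marginal p(B), summing the joint probabilities over both values of A.
p_b = p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)

# Bayes' Rule: p(A | B) = p(B | A) p(A) / p(B).
p_a_given_b = p_b_given_a * p_a / p_b

print(p_b)          # 5/8
print(p_a_given_b)  # 4/5
```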

Probabilistic Inference

In set notation, $\mathcal{V} = \{V_1, V_2, \ldots, V_k\}$ and $\mathbf{v} = \{v_1, v_2, \ldots, v_k\}$. The variables $V_1, V_2, \ldots, V_k$ having the values $v_1, v_2, \ldots, v_k$ respectively is denoted by $\mathcal{V} = \mathbf{v}$, where $\mathcal{V}$ and $\mathbf{v}$ are ordered lists.

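For a set $\mathcal{V}$, the variables in a subset $\mathcal{E}$ of $\mathcal{V}$ are given as evidence, $\mathcal{E} = \mathbf{e}$. Probabilistic inference is the computation of $p(V_i = v_i \mid \mathcal{E} = \mathbf{e})$ for some variable $V_i$ of interest.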

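For example, consider three propositional variables $P$, $Q$ and $R$, and the query $p(Q \mid \neg R)$. The evidence $\mathcal{E}$ is $R$ being false. In other words $\mathcal{E} = \mathbf{e}$ equates to $R = \text{False}$.

By the definition of conditional probability,

$$p(Q \mid \neg R) = \frac{p(Q, \neg R)}{p(\neg R)}$$

The numerator is a marginal probability, obtained by summing the joint probabilities over both values of the remaining variable $P$:

$$p(Q, \neg R) = p(P, Q, \neg R) + p(\neg P, Q, \neg R)$$

The same steps give $p(\neg Q \mid \neg R)$ in terms of $p(\neg Q, \neg R) = p(P, \neg Q, \neg R) + p(\neg P, \neg Q, \neg R)$. Since $p(Q \mid \neg R) + p(\neg Q \mid \neg R) = 1$, the denominator acts only as a normalizing constant.

Thus $p(\neg R)$ need not be computed.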
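The normalization argument can be sketched as inference by enumeration. The joint table over `(P, Q, R)` is hypothetical (illustrative weights only), and `prob`, `unnormalized` and `posterior` are names chosen for this sketch.

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint distribution over propositional variables (P, Q, R).
# The weights are illustrative and sum to 16.
assignments = list(product([True, False], repeat=3))
weights = [3, 1, 2, 2, 1, 5, 1, 1]
joint = {a: Fraction(w, 16) for a, w in zip(assignments, weights)}

def prob(fixed):
    """Sum the joint entries consistent with `fixed`, a dict {position: value}."""
    return sum(
        p for assignment, p in joint.items()
        if all(assignment[i] == v for i, v in fixed.items())
    )

# Evidence: R = False. For each value of Q, sum out P to get p(Q = q, not R).
unnormalized = {q: prob({1: q, 2: False}) for q in (True, False)}

# Normalize so the two posteriors sum to 1; p(not R) is never computed directly.
normalizer = sum(unnormalized.values())
posterior = {q: u / normalizer for q, u in unnormalized.items()}

print(posterior[True])                  # p(Q | not R) = 2/3
print(normalizer == prob({2: False}))   # True: the normalizer equals p(not R)
```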

Conditional Independence

A variable $V$ is conditionally independent of a set of variables $\mathcal{V}_i$ given a set $\mathcal{V}_j$ if

$$p(V \mid \mathcal{V}_i, \mathcal{V}_j) = p(V \mid \mathcal{V}_j) \qquad (1)$$

In other words, $\mathcal{V}_i$ tells nothing more about $V$ than is already known by knowing $\mathcal{V}_j$.

If a single variable $V_i$ is conditionally independent of another variable $V_j$ given a set $\mathcal{V}$, then by the definition of conditional probability

$$p(V_i, V_j \mid \mathcal{V}) = p(V_i \mid \mathcal{V})\,p(V_j \mid \mathcal{V}) \qquad (2)$$

Saying that $V_i$ is conditionally independent of $V_j$ given $\mathcal{V}$ also means that $V_j$ is conditionally independent of $V_i$ given $\mathcal{V}$. The same result also applies to sets $\mathcal{V}_i$ and $\mathcal{V}_j$.

As a generalization of pairwise independence, the variables $V_1, V_2, \ldots, V_k$ are mutually conditionally independent, given a set $\mathcal{V}$, if each of the variables is conditionally independent of all of the others given $\mathcal{V}$. Applying the chain rule and dropping the other variables from each condition gives

$$p(V_1, V_2, \ldots, V_k \mid \mathcal{V}) = \prod_{i=1}^{k} p(V_i \mid \mathcal{V}) \qquad (3)$$

When $\mathcal{V}$ is empty

$$p(V_1, V_2, \ldots, V_k) = p(V_1)\,p(V_2) \cdots p(V_k)$$

This implies that the variables are unconditionally independent.
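The difference between conditional and unconditional independence can be seen in a small constructed example. The joint below is built, by assumption, as $p(C)\,p(A \mid C)\,p(B \mid C)$ with made-up numbers, so `A` and `B` are conditionally independent given `C` by construction. The helper names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Hypothetical parameters (illustrative numbers only).
p_c = {True: Fraction(1, 2), False: Fraction(1, 2)}          # p(C = c)
p_a_given_c = {True: Fraction(3, 4), False: Fraction(1, 4)}  # p(A = True | C = c)
p_b_given_c = {True: Fraction(2, 3), False: Fraction(1, 3)}  # p(B = True | C = c)

def bern(p_true, value):
    """Probability of `value` for a True/False variable with p(True) = p_true."""
    return p_true if value else 1 - p_true

# Joint over (A, B, C), constructed as p(C) p(A | C) p(B | C).
joint = {
    (a, b, c): p_c[c] * bern(p_a_given_c[c], a) * bern(p_b_given_c[c], b)
    for a, b, c in product([True, False], repeat=3)
}

def prob(fixed):
    """Sum the joint entries consistent with `fixed`, a dict {position: value}."""
    return sum(
        p for assignment, p in joint.items()
        if all(assignment[i] == v for i, v in fixed.items())
    )

# Check p(A, B | C) = p(A | C) p(B | C) for every combination of values.
cond_indep = all(
    prob({0: a, 1: b, 2: c}) / prob({2: c})
    == (prob({0: a, 2: c}) / prob({2: c})) * (prob({1: b, 2: c}) / prob({2: c}))
    for a, b, c in product([True, False], repeat=3)
)

# Check p(A, B) = p(A) p(B), which fails here.
uncond_indep = all(
    prob({0: a, 1: b}) == prob({0: a}) * prob({1: b})
    for a, b in product([True, False], repeat=2)
)

print(cond_indep)    # True
print(uncond_indep)  # False
```

In this example the factorization holds once `C` is given, but `A` and `B` are not unconditionally independent, because both depend on `C`.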

Thank You

- Mark – for pointing out typos and errors

Another great article! Nice, clear explanations.

I do have some suggestions for improvement though:

It seems there is an error in the Bayes Rule section. It should be P(V_i|V_j) = P(V_j|V_i)P(V_i)/P(V_j)

I would also recommend explaining how you introduced the P and not P random variables in the Probabilistic Inference section as it is not quite clear how that works out.

Finally, it seems your Latex implementation had trouble rendering for the fourth paragraph in the Conditional Independence section (\textbf{mutually conditionally independent}), right above equation (3).

I have expanded the Probabilistic Inference section and fixed the errors you have pointed out. Thank you.